Week 1 — Neural Networks and Deep Learning

Notations (source):

Week 1 — Introduction to Deep Learning

May 1, 2021

Welcome

- AI (Artificial Intelligence) is the new electricity: just as electricity transformed industry after industry, AI is bringing about a similar change and a new era.

- Parts of this course:

- Neural Networks and Deep Learning → Recognizing cats

- Improving Deep Neural Networks: Hyperparameter tuning, Regularization and Optimization

- Structuring your Machine Learning Project → Best Practices for project

- Convolutional Neural Networks

- Natural Language Processing: Building sequence models

Introduction to Deep Learning

What Deep Learning/ML is good for

- Problems with long lists of rules: when the traditional approach fails, machine learning/deep learning may help.

- Continually changing environments: deep learning can adapt ('learn') to new scenarios.

- Discovering insights within large collections of data: imagine trying to hand-craft rules for what 101 different kinds of food look like.

What Deep Learning is (typically) not good for

- When you need explainability: the patterns learned by a deep learning model are typically uninterpretable by humans.

- When the traditional approach is a better option: if a simple rule-based system can accomplish what you need, prefer it.

- When errors are unacceptable: the outputs of a deep learning model aren't always predictable, since they are probabilistic rather than deterministic.

- When you don't have much data: deep learning models usually require a fairly large amount of data to produce great results.

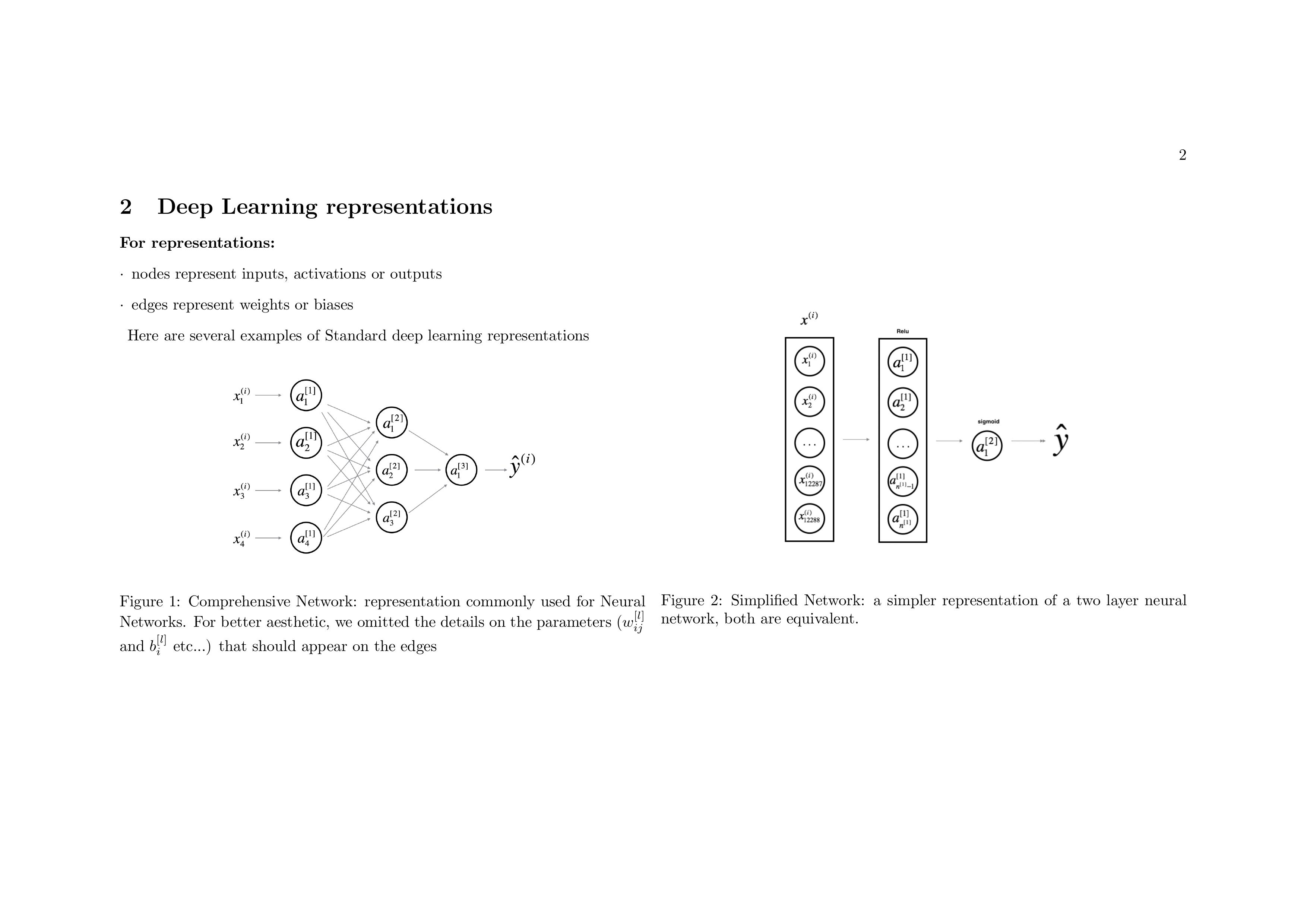

What is a neural network?

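A neural network is built by stacking layers, where each layer consists of "neurons". Each neuron computes a weighted sum of its inputs plus a bias and passes the result through an activation function, which determines how strongly that neuron is activated for the given input.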
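To make the layer/neuron idea concrete, here is a minimal sketch of a fully connected layer in plain Python. It assumes each neuron computes $z = w \cdot x + b$ and uses ReLU as its activation; the names `dense_layer` and `relu`, and all weights and inputs, are hypothetical and chosen only for illustration.

```python
from typing import Callable, List


def relu(z: float) -> float:
    """ReLU activation: passes positive values through, clips negatives to 0."""
    return max(0.0, z)


def dense_layer(
    x: List[float],
    weights: List[List[float]],
    biases: List[float],
    activation: Callable[[float], float] = relu,
) -> List[float]:
    """Forward pass of one fully connected layer (hypothetical helper).

    weights[j] holds the input weights of neuron j and biases[j] its bias.
    Each neuron computes z = w . x + b and outputs activation(z).
    """
    outputs = []
    for w_j, b_j in zip(weights, biases):
        z = sum(w_i * x_i for w_i, x_i in zip(w_j, x)) + b_j
        outputs.append(activation(z))
    return outputs


# Hypothetical tiny network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
x = [1.0, 2.0, 3.0]
hidden = dense_layer(x, weights=[[0.5, -0.25, 0.25], [-1.0, -0.5, 0.25]], biases=[0.0, 0.5])
output = dense_layer(hidden, weights=[[2.0, -1.0]], biases=[0.5])
print(hidden, output)  # prints [0.75, 0.0] [2.0]
```

The second hidden neuron's weighted sum is negative ($-0.75$), so ReLU leaves it inactive (output 0); that is what "activated depending on its activation function and the input" means in practice.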

Supervised Learning with Neural Networks

Supervised learning refers to problems where we have both inputs $x$ and labels (outputs) $y$, and we use machine learning techniques to predict the labels from the inputs. The model learns the mapping from inputs to outputs from these labelled examples.
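A minimal sketch of that recipe, assuming the simplest possible model (a straight line $\hat{y} = wx + b$) trained with plain gradient descent on the mean squared error. The data points, learning rate and step count are made up for illustration, and `fit_line` is a hypothetical helper. A neural network swaps the line for a far more flexible function, but the idea is the same: labelled $(x, y)$ pairs go in, a learned mapping comes out.

```python
from typing import List, Tuple


def fit_line(
    xs: List[float], ys: List[float], lr: float = 0.05, steps: int = 2000
) -> Tuple[float, float]:
    """Learn w, b so that w * x + b approximates y (gradient descent on MSE)."""
    w, b = 0.0, 0.0
    m = len(xs)  # number of training examples
    for _ in range(steps):
        # Gradients of (1/m) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        dw = 2.0 / m * sum(e * x for e, x in zip(errors, xs))
        db = 2.0 / m * sum(errors)
        w -= lr * dw
        b -= lr * db
    return w, b


# Hypothetical labelled training data; the underlying mapping is y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")  # prints learned w = 2.000, b = 1.000
print(f"prediction for x = 5: {w * 5 + b:.3f}")
```

The model never sees the rule $y = 2x + 1$; it recovers it from the five labelled examples alone and can then predict labels for inputs it has not seen.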

The data can be:

- Structured Data (tables)

- Unstructured Data (images, audio, etc.)

Why is Deep Learning taking off?

Three reasons:

- Backpropagation algorithm

- Glorot and He initialization

- ReLU (Rectified Linear Unit) activation function
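The ReLU activation and the two initialization schemes listed above fit in a few lines. This sketch compares the gradient of ReLU, $\max(0, z)$, with that of the sigmoid it largely replaced in hidden layers: the sigmoid's gradient is at most 0.25 and shrinks towards 0 for large $|z|$, which slows gradient descent, while ReLU's gradient stays 1 for all positive inputs. It also shows the usual weight scales: Glorot (Xavier) initialization uses variance $2/(n_{in} + n_{out})$ and He initialization uses variance $2/n_{in}$, where $n_{in}$ and $n_{out}$ are a layer's fan-in and fan-out. The function names are hypothetical, and drawing from a normal distribution is one common choice (uniform variants also exist).

```python
import math
import random
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    # Derivative of the sigmoid: at most 0.25 (at z = 0), near 0 for large |z|.
    s = sigmoid(z)
    return s * (1.0 - s)


def relu_grad(z: float) -> float:
    # Derivative of ReLU: 1 for positive inputs, 0 otherwise (0 chosen at z = 0).
    return 1.0 if z > 0 else 0.0


def glorot_normal(n_in: int, n_out: int, rng: random.Random) -> List[List[float]]:
    """n_out x n_in weight matrix drawn with variance 2 / (n_in + n_out)."""
    std = math.sqrt(2.0 / (n_in + n_out))
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]


def he_normal(n_in: int, n_out: int, rng: random.Random) -> List[List[float]]:
    """n_out x n_in weight matrix drawn with variance 2 / n_in (suited to ReLU)."""
    std = math.sqrt(2.0 / n_in)
    return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]


# Gradients in the saturated region: sigmoid's vanishes, ReLU's does not.
for z in (0.5, 5.0, 10.0):
    print(f"z={z}: sigmoid'={sigmoid_grad(z):.5f}, relu'={relu_grad(z):.1f}")

# Check that He-initialized weights have roughly the intended variance.
rng = random.Random(0)
W = he_normal(n_in=256, n_out=128, rng=rng)
flat = [w for row in W for w in row]
var = sum(w * w for w in flat) / len(flat)  # mean is 0, so E[w^2] is the variance
print(f"empirical He variance: {var:.4f} (target {2 / 256:.4f})")
```

Scaling the initial weights by the layer's fan-in keeps the size of activations and gradients roughly stable from layer to layer, which is why these schemes made deeper networks easier to train.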

Other reasons:

- Data

- Computation power

- Algorithms

The scale at which we are creating data also matters: as the amount of labelled data grows, the performance of (large) neural networks keeps improving, whereas the performance of traditional machine learning algorithms plateaus.

In this course, $m$ denotes the number of training examples.

About this Course

- Week 1: Introduction

- Week 2: Basics of Neural Network programming

- Week 3: One hidden layer Neural Networks

- Week 4: Deep Neural Networks

Info

Unless otherwise mentioned, any image used here for illustration is attributed to Andrew Ng's lecture slides.